Mistral AI continues to innovate: it recently launched Ministral, a new family of language models. The two models, Ministral 3B and Ministral 8B, are designed for on-device and edge computing, revolutionizing the way AI can be used on everyday devices.

Main features of Ministral models

Both models are optimized to run on edge devices such as smartphones and laptops.

This enables real-time processing without relying on cloud infrastructure, which is particularly useful for applications that require low latency or stronger privacy, since sensitive data stays on the device.

Performance

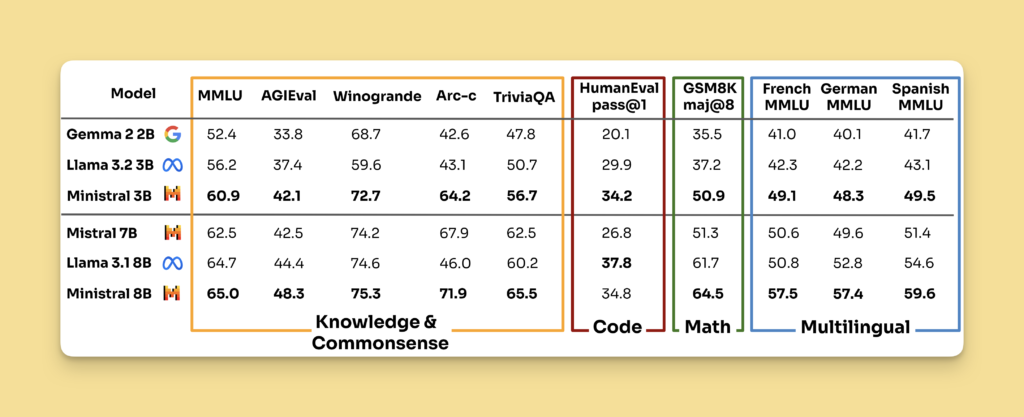

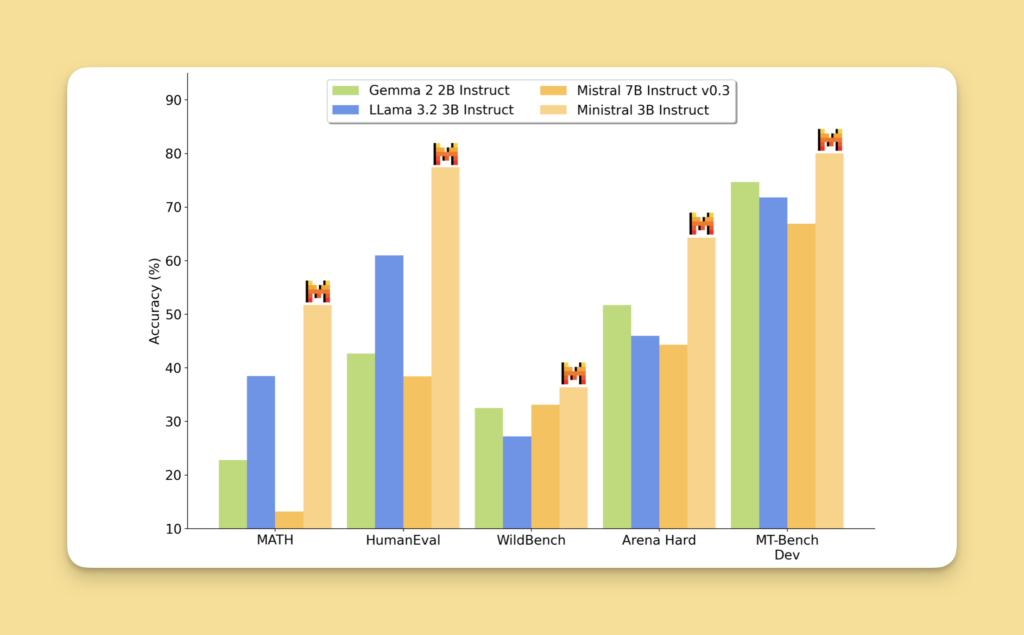

Despite being much smaller than traditional large language models, the Ministral models deliver impressive performance.

The Ministral 3B model, with 3 billion parameters, outperforms Mistral's earlier 7-billion-parameter model, Mistral 7B, on various benchmarks.

The larger Ministral 8B model competes effectively with much larger models.

Context length

Both models support a context length of up to 128,000 tokens, enabling analysis and summarization of long documents.
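The prompt and the generated answer share this window, so a long document has to leave room for the output. Here is a minimal sketch of that budgeting, assuming the document has already been tokenized (token IDs are represented as plain integers; real tokenization with the Ministral tokenizer is outside the sketch). `fits_in_context` and `chunk_tokens` are hypothetical helpers, and fixed-size chunking is a generic technique, not something Mistral prescribes.

```python
from typing import List, Sequence

# Maximum context length quoted for both Ministral models.
CONTEXT_LENGTH = 128_000


def fits_in_context(prompt_tokens: int, max_output_tokens: int,
                    context_length: int = CONTEXT_LENGTH) -> bool:
    """Return True if the prompt plus the requested output fit in the window."""
    return prompt_tokens + max_output_tokens <= context_length


def chunk_tokens(tokens: Sequence[int], max_output_tokens: int,
                 context_length: int = CONTEXT_LENGTH) -> List[Sequence[int]]:
    """Split tokens into consecutive chunks that each leave room for the output."""
    budget = context_length - max_output_tokens
    if budget <= 0:
        raise ValueError("max_output_tokens must be smaller than the context length")
    return [tokens[i:i + budget] for i in range(0, len(tokens), budget)]


if __name__ == "__main__":
    report = list(range(300_000))  # stand-in for 300,000 token IDs
    chunks = chunk_tokens(report, max_output_tokens=4_000)
    print([len(c) for c in chunks])
```

For example, a 300,000-token report with 4,000 tokens reserved for each summary splits into three chunks of 124,000, 124,000 and 52,000 tokens.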

Innovative architecture

Ministral 8B uses an interleaved sliding-window attention pattern, which makes inference faster and more memory-efficient, especially on long sequences of text.
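The idea behind sliding-window attention can be sketched in a few lines of Python. In a sliding-window layer, each token attends only to the most recent `window` tokens instead of the whole prefix, so the number of query/key pairs per layer grows as O(n·w) rather than O(n²). "Interleaved" is modeled here, purely as an assumption, by alternating sliding-window and full causal layers; the window size and the exact layer pattern Ministral 8B uses are not described in this article, and all helper names are hypothetical.

```python
from typing import List

Mask = List[List[bool]]


def causal_mask(seq_len: int) -> Mask:
    """Full causal attention: token i may attend to every token j <= i."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]


def sliding_window_mask(seq_len: int, window: int) -> Mask:
    """Sliding-window causal attention: token i attends only to the
    `window` most recent tokens, i.e. i - window < j <= i."""
    return [[i - window < j <= i for j in range(seq_len)] for i in range(seq_len)]


def layer_masks(num_layers: int, seq_len: int, window: int) -> List[Mask]:
    """Hypothetical interleaving rule (assumption for illustration only):
    even-indexed layers use a sliding window, odd-indexed layers use full
    causal attention."""
    return [
        sliding_window_mask(seq_len, window) if layer % 2 == 0 else causal_mask(seq_len)
        for layer in range(num_layers)
    ]


def attended_pairs(mask: Mask) -> int:
    """Count the query/key pairs a layer actually computes."""
    return sum(row.count(True) for row in mask)


if __name__ == "__main__":
    seq_len, window = 8, 3
    for row in sliding_window_mask(seq_len, window):
        print("".join("x" if allowed else "." for allowed in row))
    print("full causal pairs:", attended_pairs(causal_mask(seq_len)))
    print("sliding-window pairs:", attended_pairs(sliding_window_mask(seq_len, window)))
```

With 8 tokens and a window of 3, the sliding-window layer computes 21 query/key pairs instead of 36, and the gap widens as the sequence grows.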

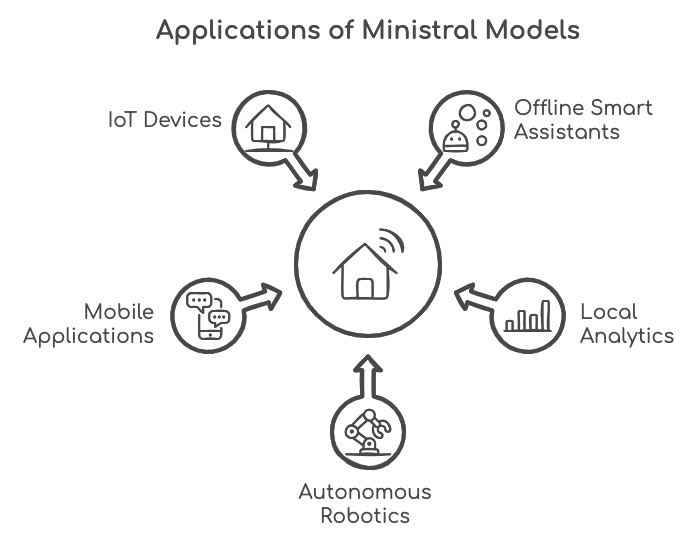

Applications of Ministral models

Here are some examples of practical applications of the Ministral 3B and Ministral 8B models in everyday devices:

On-device translation: Provide real-time language translation on smartphones and tablets.

Smart offline assistants: Enable devices such as smart speakers and personal assistants to respond to user queries and manage tasks without relying on cloud services.

Local analytics: Process data locally to provide immediate insights and recommendations without sending sensitive information to external servers.[5]

Autonomous robotics: Integrate the models into autonomous systems, such as drones or factory robots, so they can make decisions in real time.[6]

Mobile applications: Power mobile applications that need natural language processing capabilities, such as chatbots, text summarization and content generation.[6]

IoT devices: Improve features such as voice recognition and local command execution.[7]

Environmental considerations

Mistral AI has positioned its models as environmentally friendly alternatives.

By focusing on efficiency and reducing the computational resources required for large language models, Mistral aims to mitigate the environmental impact associated with traditional AI technologies.

Availability and pricing

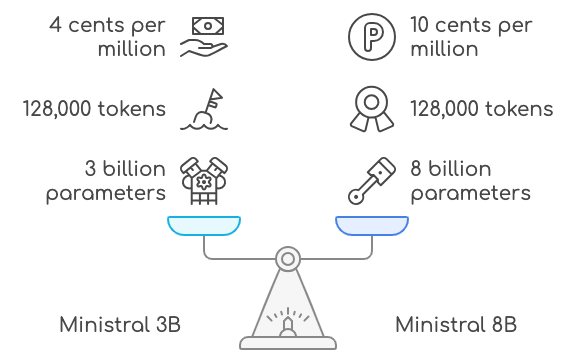

Both Ministral models are available via Mistral's cloud platform for commercial use, with pricing based on token usage.
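For cloud access, a request to Mistral's chat-completions endpoint can be sketched with the Python standard library alone. The endpoint URL, the `ministral-8b-latest` model alias and the response fields are assumptions based on Mistral's public API and should be checked against the current documentation; `build_chat_request` and the `MISTRAL_API_KEY` environment variable name are hypothetical. The function only builds the request, so it can be inspected without an API key.

```python
import json
import os
import urllib.request

# Assumed endpoint of Mistral's chat-completions API; verify before use.
API_URL = "https://api.mistral.ai/v1/chat/completions"


def build_chat_request(prompt: str, model: str = "ministral-8b-latest",
                       api_key: str = "") -> urllib.request.Request:
    """Build (but do not send) a chat-completions request for a Ministral model."""
    payload = {
        "model": model,  # assumed alias; "ministral-3b-latest" for the 3B model
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("MISTRAL_API_KEY")  # hypothetical variable name
    req = build_chat_request("Summarize this paragraph in one sentence.", api_key=key or "")
    if key:
        # Only contact the API when a key is available.
        with urllib.request.urlopen(req) as resp:
            body = json.load(resp)
            print(body["choices"][0]["message"]["content"])
    else:
        print("Set MISTRAL_API_KEY to send the request.")
```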

The company also offers self-deployment options under commercial licenses.

Pricing is 10 US cents per million tokens for Ministral 8B and 4 US cents per million tokens for Ministral 3B. A research license is also available.

Comparison of Ministral models

| Model | Parameters (billions) | Context length (tokens) | Price (per million tokens) |
|---|---|---|---|
| Ministral 3B | 3 | 128,000 | 4 cents |
| Ministral 8B | 8 | 128,000 | 10 cents |
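Because billing is per token, estimating a bill is simple arithmetic. This sketch uses the prices in the table (10 and 4 US cents per million tokens), assumes the same rate applies to input and output tokens, and uses `fractions.Fraction` to avoid floating-point rounding. The model keys and `estimate_cost_usd` are hypothetical names; check Mistral's current price list before relying on these figures.

```python
from fractions import Fraction

# Prices in US cents per million tokens, as listed in the table above
# (assumption: the same rate applies to input and output tokens).
PRICE_CENTS_PER_MILLION = {
    "ministral-8b": 10,
    "ministral-3b": 4,
}


def estimate_cost_usd(model: str, tokens: int) -> Fraction:
    """Return the exact cost in US dollars for `tokens` tokens on `model`."""
    if tokens < 0:
        raise ValueError("token count must be non-negative")
    cents = Fraction(tokens, 1_000_000) * PRICE_CENTS_PER_MILLION[model]
    return cents / 100


if __name__ == "__main__":
    monthly_tokens = 50_000_000  # hypothetical workload: 50 million tokens
    for model in PRICE_CENTS_PER_MILLION:
        cost = estimate_cost_usd(model, monthly_tokens)
        print(f"{model}: ${float(cost):.2f}")
```

For a hypothetical workload of 50 million tokens a month, this gives $5.00 on Ministral 8B and $2.00 on Ministral 3B.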

The Ministral models represent a significant advance in embedded AI technology, offering powerful capabilities while addressing privacy and environmental concerns.

Their ability to run on edge devices opens up a wide range of new possibilities for AI applications, bringing AI closer to the end user and revolutionizing the way we interact with technology.
