OpenAI recently launched model distillation through its API, as detailed on its official page. The feature lets developers and enterprises build lighter, more efficient AI models whose performance stays close to that of the larger models they are derived from.

By offering the ability to distill complex models into simplified versions, OpenAI paves the way for wider adoption of AI in various sectors, while reducing costs and improving application responsiveness.

In this article, we’ll take an in-depth look at this announcement and how to implement these new possibilities.

What is model distillation?

Model distillation is a process where a large, complex model (the teacher model) transfers its knowledge to a smaller, simpler model (the student model).

The aim is to maintain similar performance while reducing the size and complexity of the student model.

- Size Reduction: Distilled models are less memory-intensive, making them easier to deploy on resource-constrained devices.

- Computational efficiency: Less computing power is required to run the model, reducing operational costs.

- Performance Maintenance: Despite their reduced size, distilled models can approach or even match the performance of teacher models.

How model distillation works

- Teacher Model Training: A complex model is first trained on a large dataset to achieve optimal performance.

- Knowledge Transfer: Output from the teacher model is used as a guide to train the student model.

- Optimization: The student model is refined until it reproduces the behavior of the teacher model as closely as possible.
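To make the knowledge-transfer step concrete, here is a minimal sketch of the classic soft-target distillation loss, in which the student is penalized for diverging from the teacher's temperature-softened output distribution. It is an illustration of the general technique only, not a description of OpenAI's internal training; the hosted workflow described later in this article trains the student on teacher-generated text instead. The function names and the default temperature are assumptions chosen for the example.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities, softened by the temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) computed on temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    # The T^2 factor keeps gradient magnitudes comparable across temperatures.
    return temperature ** 2 * kl
```

The loss is zero when the student's logits match the teacher's and grows as the two distributions drift apart, which is the signal the optimization step minimizes.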

OpenAI’s application of distillation in its APIs

OpenAI uses model distillation to improve the accessibility and efficiency of its APIs.

By distilling advanced models like GPT-4o, OpenAI can offer:

- Faster Responses: Lighter models process queries at higher speeds.

- Improved Accessibility: Developers can integrate powerful models without requiring an expensive infrastructure.

- Better User Experience: AI-based applications become more responsive and fluid.

Key benefits for developers and businesses

- Cost Savings: Reduce hosting and computing expenses.

- Scalability: Ability to serve more users without compromising performance.

- Flexibility: Easy integration into a variety of environments, including mobile devices and embedded systems.

Implications for the AI industry

Model distillation opens up new perspectives for AI innovation:

- Democratization of AI: Gives small businesses and startups access to technologies previously reserved for the big players.

- Diversified Applications: Promotes the development of applications in fields such as healthcare, education and financial services.

- Sustainability: Contributes to reducing the carbon footprint of AI technologies by lowering energy consumption.

How to distill a model using the OpenAI interface

Tutorial objectives

- Understand OpenAI’s interface for fine-tuning and distilling models

- Prepare training and validation data for fine-tuning

- Configure the essential hyperparameters for efficiently distilling a model

- Launch the fine-tuning process and evaluate the results

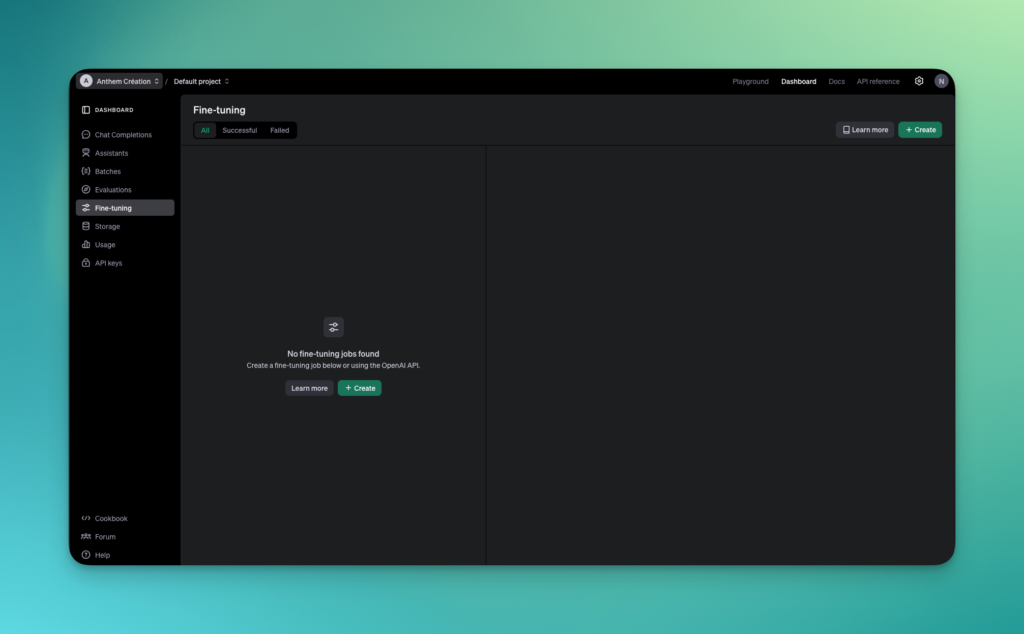

Step 1: Access the fine-tuning interface

- Log in to OpenAI: Make sure you’re logged in to your OpenAI account and have access to the API for creating fine-tuned models.

- Navigate to Dashboard: From your dashboard, in the left-hand navigation bar, click on “Fine-tuning” as shown in the image.

- Create a new model: Click on the green “Create” button to open the fine-tuned model creation form.

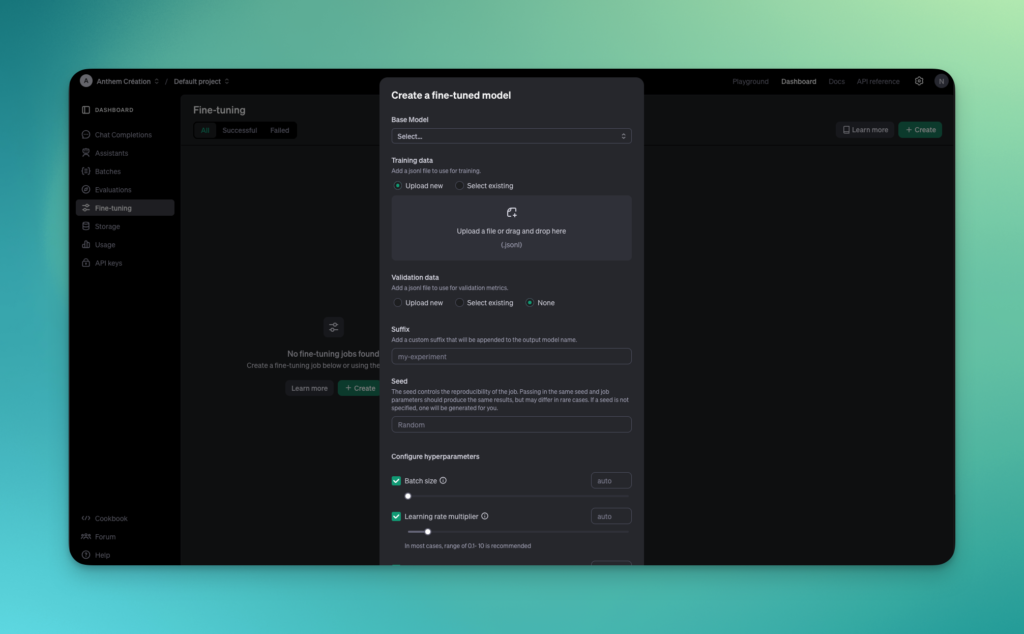

Step 2: Select the base model

In the first section of the form, you need to choose a base model on which to apply distillation. Here’s how to do it:

- Base Model: Click on the drop-down menu and choose the base model to fine-tune. In a distillation workflow this is the smaller student model (for example, GPT-4o mini), which is trained on outputs produced by a larger teacher such as GPT-4o. The options listed depend on the models available to your account.
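Distillation needs teacher outputs before any training file can be uploaded. The following is a minimal sketch of gathering them through the Chat Completions API. It assumes `client` behaves like an `openai.OpenAI()` instance; the function name, the default teacher model, and the metadata tag are illustrative choices, not part of the original walkthrough.

```python
def collect_teacher_pairs(client, prompts, teacher_model="gpt-4o"):
    """Ask the teacher model each prompt and return (prompt, answer) pairs.

    `client` is assumed to expose `chat.completions.create`, as the
    official OpenAI Python SDK client does.
    """
    pairs = []
    for prompt in prompts:
        response = client.chat.completions.create(
            model=teacher_model,
            messages=[{"role": "user", "content": prompt}],
            store=True,  # keep the completion in your account for later reuse
            metadata={"purpose": "distillation"},  # hypothetical tag for filtering
        )
        pairs.append((prompt, response.choices[0].message.content))
    return pairs


# Usage (requires the `openai` package and an API key in the environment):
#   from openai import OpenAI
#   pairs = collect_teacher_pairs(OpenAI(), ["Summarize: ..."])
```

Passing the client in as an argument keeps the function easy to exercise with a stub before spending API credits on a real run.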

Step 3: Add training data

Model distillation requires training data to guide model optimization.

- Training Data: You need to provide a file in .jsonl format containing the examples the model will use to train. You can either:

- Upload new: Import a new data file by clicking on “Upload a file”, or

- Select existing: Select a file already available in your storage space.

The .jsonl file must be structured with query and response pairs generated by the teacher model to guide the student model.

- Validation Data (optional): You can also provide a validation file to measure model performance during training. This file must also be in .jsonl format.
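As a sketch of what these files can look like, the snippet below converts teacher-generated (prompt, answer) pairs into the chat-style JSONL layout used for fine-tuning chat models, where each line is a JSON object with a `messages` list, and splits them into a training file and a validation file. The helper names, the split fraction, and the file paths are assumptions made for the example.

```python
import json
import random


def to_chat_example(prompt, answer, system_prompt=None):
    """Wrap one prompt/answer pair in the chat fine-tuning record layout."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": prompt})
    messages.append({"role": "assistant", "content": answer})
    return {"messages": messages}


def write_jsonl(examples, path):
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as handle:
        for example in examples:
            handle.write(json.dumps(example, ensure_ascii=False) + "\n")


def split_and_write(pairs, train_path, valid_path, valid_fraction=0.1, seed=0):
    """Shuffle the pairs, hold out a validation slice, and write both files."""
    examples = [to_chat_example(prompt, answer) for prompt, answer in pairs]
    random.Random(seed).shuffle(examples)  # seeded so the split is repeatable
    n_valid = int(len(examples) * valid_fraction)
    write_jsonl(examples[n_valid:], train_path)
    write_jsonl(examples[:n_valid], valid_path)
    return len(examples) - n_valid, n_valid
```

For instance, `split_and_write(pairs, "train.jsonl", "valid.jsonl")` would produce the two files to upload in this step.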

Step 4: Configure model name and reproducibility

- Suffix: Add a suffix to your model to differentiate it from other experiments. For example, entering “my-experiment” produces a fine-tuned model name that contains that suffix, along the lines of “ft:<base-model>:<organization>:my-experiment:<id>”.

- Seed: The seed allows you to reproduce the same result in multiple training sessions. You can define a specific seed to ensure reproducibility, or leave the “Random” option if this isn’t necessary.

Step 5: Configure hyperparameters

The interface allows you to set several hyperparameters essential for model distillation:

- Batch Size: Enable the “Batch size” option to adjust the size of training batches. The default value is generally a good starting point; larger batches tend to suit larger datasets.

- Learning Rate Multiplier: The learning rate determines how quickly the model adjusts its parameters. You can either use an automatic default value, or set a value manually (usually between 0.01 and 10 for distillation).
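The same form fields can also be set programmatically. The sketch below assembles the arguments for a fine-tuning job (base model, suffix, seed, batch size, learning rate multiplier) and passes them to `client.fine_tuning.jobs.create`. It assumes `client` behaves like an `openai.OpenAI()` instance; the helper names and the example model identifier are illustrative, and newer SDK versions may prefer that hyperparameters be passed under a `method` argument instead.

```python
def build_job_request(training_file_id, base_model, suffix=None, seed=None,
                      validation_file_id=None, batch_size="auto",
                      learning_rate_multiplier="auto"):
    """Assemble keyword arguments mirroring the fields of the creation form."""
    request = {
        "training_file": training_file_id,
        "model": base_model,
        "hyperparameters": {
            "batch_size": batch_size,  # "auto" or an integer
            "learning_rate_multiplier": learning_rate_multiplier,  # "auto" or a number
        },
    }
    # Optional fields are only sent when the caller sets them.
    if validation_file_id is not None:
        request["validation_file"] = validation_file_id
    if suffix is not None:
        request["suffix"] = suffix
    if seed is not None:
        request["seed"] = seed
    return request


def launch_job(client, request):
    """Submit the job; `client` is assumed to be an OpenAI SDK client."""
    return client.fine_tuning.jobs.create(**request)


# Usage (requires the `openai` package and an API key in the environment):
#   from openai import OpenAI
#   client = OpenAI()
#   uploaded = client.files.create(file=open("train.jsonl", "rb"), purpose="fine-tune")
#   request = build_job_request(uploaded.id, "gpt-4o-mini-2024-07-18",
#                               suffix="my-experiment", seed=42)
#   job = launch_job(client, request)
```

Keeping the request as a plain dictionary makes it easy to log the exact configuration of each experiment alongside its seed.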

Step 6: Start the distillation process

- Check parameters: Before starting the training run, check that all parameters are correctly configured.

- Start fine-tuning: Click on “Create” to start the distillation process. You’ll then see the status of your job (pending, in progress or completed) appear in the dashboard.
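The job status shown in the dashboard can also be polled from code. This is a minimal sketch assuming `client` behaves like an `openai.OpenAI()` instance and that the job object exposes a `status` string that eventually reaches one of the terminal values listed below; the function name, polling interval, and poll limit are assumptions for the example.

```python
import time

# Statuses after which the job will not change any further.
TERMINAL_STATUSES = {"succeeded", "failed", "cancelled"}


def wait_for_job(client, job_id, poll_seconds=30, max_polls=1000, sleep=time.sleep):
    """Poll a fine-tuning job until it finishes, then return the job object."""
    for _ in range(max_polls):
        job = client.fine_tuning.jobs.retrieve(job_id)
        if job.status in TERMINAL_STATUSES:
            return job
        sleep(poll_seconds)  # injectable so tests can skip the real wait
    raise TimeoutError(f"job {job_id} did not finish after {max_polls} polls")


# Usage (requires the `openai` package and an API key in the environment):
#   from openai import OpenAI
#   finished = wait_for_job(OpenAI(), "ftjob-...")
#   print(finished.status, finished.fine_tuned_model)
```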

Step 7: Monitoring and evaluating the distilled model

Once training is complete, you can evaluate the model’s performance using the OpenAI API.

- Evaluation of results: After distillation, access results and evaluation metrics (such as losses on validation data). OpenAI provides this information to help you adjust future models.

- Testing the model: You can directly test the fine-tuned model to see if it meets your performance and efficiency targets.
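One simple way to test the distilled model is to compare its answers with the teacher's on prompts that were held out of training. The sketch below computes a crude agreement rate based on normalized exact matches; this metric is an assumption chosen for brevity and suits short, factual answers far better than free-form text. It assumes `client` behaves like an `openai.OpenAI()` instance, and all function names are illustrative.

```python
def ask(client, model, prompt):
    """Send one prompt to a model and return the text of its reply."""
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        temperature=0,  # reduce sampling noise for a fairer comparison
    )
    return response.choices[0].message.content or ""


def normalize(text):
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def agreement_rate(client, student_model, prompts, teacher_answers):
    """Fraction of prompts where the student matches the teacher's answer."""
    if not prompts or len(prompts) != len(teacher_answers):
        raise ValueError("prompts and teacher_answers must be non-empty and aligned")
    matches = sum(
        normalize(ask(client, student_model, prompt)) == normalize(reference)
        for prompt, reference in zip(prompts, teacher_answers)
    )
    return matches / len(prompts)
```

A low agreement rate on held-out prompts is a hint that the training set does not yet cover the use case well, or that the student needs more examples.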

Tips and best practices

- Optimize the dataset: Make sure that the training data provided covers the specific use cases of your model.

- Balance hyperparameters: Adjust parameters such as learning rate and batch size to find a compromise between accuracy and convergence speed.

- Multiple iterations: Test several versions of the model with different seeds and parameters to see what works best in your context.
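As part of optimizing the dataset, it can help to check the training file for structural problems before uploading it. The sketch below scans a JSONL file and reports lines that are empty, are not valid JSON, lack a `messages` list, use an unexpected role, or do not end with an assistant reply. The set of allowed roles is a simplifying assumption that covers plain chats only, and the function name is illustrative.

```python
import json

# Assumption: simple chats only; tool or function messages are not handled here.
ALLOWED_ROLES = {"system", "user", "assistant"}


def validate_jsonl(path):
    """Return a list of human-readable problems found in a chat JSONL file."""
    problems = []
    with open(path, encoding="utf-8") as handle:
        for number, line in enumerate(handle, start=1):
            if not line.strip():
                problems.append(f"line {number}: empty line")
                continue
            try:
                record = json.loads(line)
            except json.JSONDecodeError as error:
                problems.append(f"line {number}: invalid JSON ({error.msg})")
                continue
            messages = record.get("messages") if isinstance(record, dict) else None
            if not isinstance(messages, list) or not messages:
                problems.append(f"line {number}: missing or empty 'messages' list")
                continue
            bad_roles = [
                m for m in messages
                if not isinstance(m, dict) or m.get("role") not in ALLOWED_ROLES
            ]
            if bad_roles:
                problems.append(f"line {number}: unexpected or missing role")
            elif messages[-1]["role"] != "assistant":
                problems.append(f"line {number}: last message is not from the assistant")
    return problems
```

An empty list means no structural issue was detected; it says nothing about whether the examples themselves are good.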

Conclusion

Distilling models via OpenAI’s fine-tuning interface is a powerful and relatively simple process thanks to the dashboard’s intuitive UI.

By following these steps, you can reduce the size of your models while keeping their performance close to that of the teacher, making them well suited to large-scale applications or resource-constrained environments.

For more details, you can consult the official OpenAI documentation or join the community for further advice and to share your results.
