Installing DeepSeek-R1 locally is a powerful way to take advantage of this model’s advanced capabilities while keeping full control over your data. With LM Studio, a user-friendly desktop application, you can easily download and run large language models (LLMs) on your own machine. Discover the benefits and follow this step-by-step guide to a successful DeepSeek-R1 installation.

Why install DeepSeek-R1 locally?

Local installation of DeepSeek-R1 offers several significant advantages:

- Privacy and security: No data is sent to an external server, ensuring that your information remains on your machine. Ideal for businesses and sensitive projects.

- Fast response times: No network round trips to an external API or remote server. With a high-performance GPU (e.g. RTX 4090, M2 Ultra), responses can be generated very quickly.

- Savings on API costs: Avoid the recurring costs associated with using models like GPT-4 or Claude via API.

- Customization and fine-tuning: Tune DeepSeek-R1 to your specific needs, add special knowledge, and modify system prompts for tailored behavior.

- Integration with other tools: Can be connected to automated workflows with Notion, Obsidian, Make.com, etc., while LM Studio provides an intuitive interface.

In brief: The benefits of DeepSeek-R1

DeepSeek-R1 is an open-source model designed to deliver advanced performance in natural language processing (NLP):

- Excellent text generation performance: Optimized for accurate responses; excels at writing, programming, analysis and conversational assistance.

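- Optimized for efficient execution: Available in several distilled sizes (1.5B, 7B, 8B, 14B, 32B, 70B) built on Qwen and Llama base models, so you can match the model to your hardware. The full DeepSeek-R1 model (671 billion parameters) is far too large for most local setups.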

- Open-source and adaptable: Openly released weights and the ability to adjust the model for specific needs.

Installation guide for DeepSeek-R1 with LM Studio

Step 1: Download and install LM Studio

- Access the official LM Studio website: lmstudio.ai.

- Download the appropriate version for your operating system (Windows, macOS or Linux).

- Install the application following the platform-specific instructions.

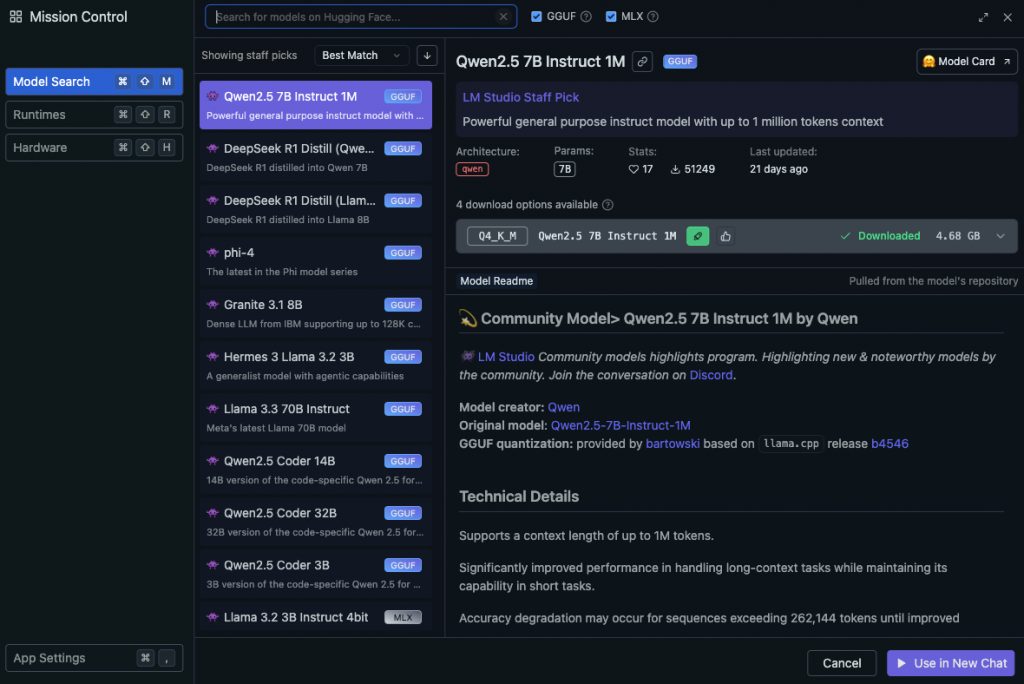

Step 2: Launch LM Studio and download DeepSeek-R1

- Open LM Studio.

- Open the “Discover” tab to browse the catalog of available models.

- Search for “DeepSeek-R1” and select the version best suited to your hardware resources.

- Click “Download” to download the selected model.

Step 3: Load and use the DeepSeek-R1 model

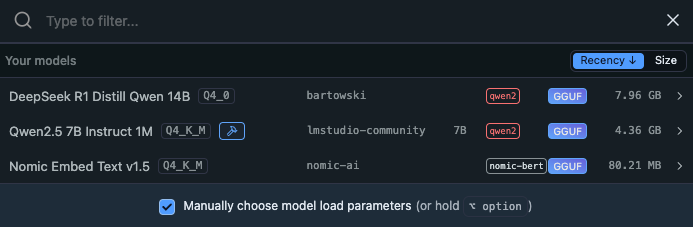

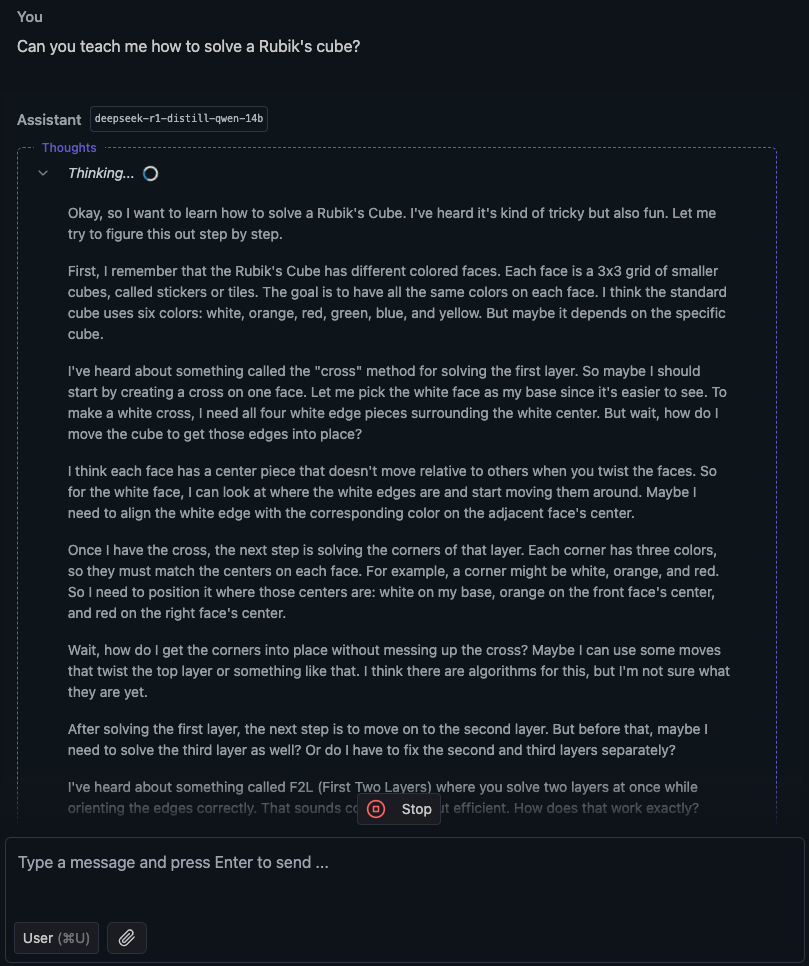

- Go to the “Chat” tab in LM Studio.

- Select the DeepSeek-R1 model you’ve just downloaded.

- The model will load into memory. This may take a few moments depending on the size of the model and your system resources.

- Start interacting with the model by typing your messages in the text field.

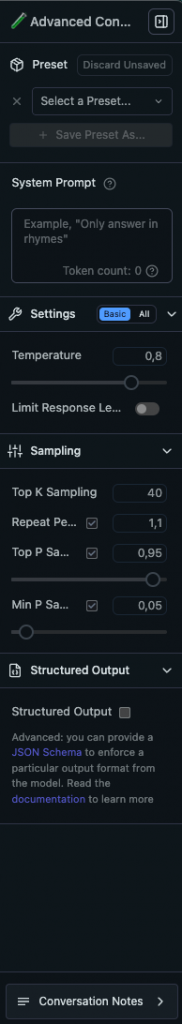
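The same model can also be used from your own scripts. LM Studio can run a local server that exposes an OpenAI-compatible API (by default at `http://localhost:1234/v1`, once you start the server from within the app). The following is a minimal sketch using only the Python standard library. It assumes the server is running with a DeepSeek-R1 model loaded; `MODEL_ID` is a hypothetical placeholder that you should replace with the identifier LM Studio displays for your download, and the helper names are illustrative.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port (1234).
BASE_URL = "http://localhost:1234/v1"
# Hypothetical placeholder: replace with the model identifier shown in LM Studio.
MODEL_ID = "deepseek-r1-distill-qwen-7b"


def build_chat_request(prompt: str, system: str = "", temperature: float = 0.6) -> dict:
    """Build an OpenAI-style chat completion payload (temperature is an illustrative default)."""
    messages = []
    if system:
        # Optional system prompt, e.g. "Answer only in French".
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": prompt})
    return {"model": MODEL_ID, "messages": messages, "temperature": temperature}


def ask(prompt: str, system: str = "") -> str:
    """POST the prompt to the local server and return the reply text."""
    payload = json.dumps(build_chat_request(prompt, system)).encode("utf-8")
    req = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Generous timeout: reasoning models can take a while on modest hardware.
    with urllib.request.urlopen(req, timeout=300) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

For example, `print(ask("Explain recursion in two sentences.", system="Answer only in French"))` sends a system prompt and a question to the loaded model and prints its reply. Nothing leaves your machine: the request only goes to `localhost`.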

Advanced settings in LM Studio

To optimize your experience with DeepSeek-R1 in LM Studio, explore the advanced settings that allow you to customize the model’s behavior to your needs.

- Preset

  - Preset selection: Save and load different presets to quickly adjust parameters to your needs (content creation, analysis, dialogue, etc.).

  - “Save Preset As” button: Save specific configurations for quick reuse.

- System Prompt

  - System message: Influences how the model responds.

  - Example: “Answer only in French” or “Give detailed, technical answers”.

- Settings

  - Temperature

    - Controls the creativity of the model.

    - Low value (0.2 – 0.5): More deterministic and consistent responses.

    - High value (0.7 – 1.2): More random and creative responses.

  - Limit Response Length

    - Caps the number of tokens generated, to avoid overly long responses.

- Sampling

  - Top K Sampling

    - Restricts sampling to the K most likely tokens at each step.

    - Low values (5-20): More restrictive, more consistent responses.

    - High values (40-100): More diversity in responses.

  - Repeat Penalty

    - Penalizes tokens that have already appeared, to avoid repetitive loops in responses.

    - Recommended value: 1.1 – 1.2 for a good balance.

  - Top P Sampling

    - Keeps only the smallest set of most likely tokens whose cumulative probability reaches the Top P value (for example 0.95, i.e. 95%).

    - Recommended value: 0.9 – 0.95 for fluent text.

  - Min P Sampling

    - Discards tokens whose probability is below Min P times the probability of the most likely token.

    - Recommended value: 0.01 – 0.05.

- Structured Output

  - JSON Schema: Enable this option to force the output to follow a structured format (e.g. JSON matching a schema). Useful for automated integrations or data analysis.
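To make the sampling settings above more concrete, here is a simplified Python sketch of how a repeat penalty, Top K, Top P, Min P and temperature can reshape the next-token distribution. It is illustrative only: it works on a toy `{token: logit}` dictionary, the function names are hypothetical, and the order in which the filters are applied is one common choice; the inference engine behind LM Studio may apply them differently or in another order.

```python
import math
import random


def softmax(logits):
    """Convert a {token: logit} dict into a {token: probability} dict."""
    m = max(logits.values())
    exps = {t: math.exp(v - m) for t, v in logits.items()}
    total = sum(exps.values())
    return {t: e / total for t, e in exps.items()}


def filter_candidates(logits, temperature=0.7, top_k=40, top_p=0.95,
                      min_p=0.05, repeat_penalty=1.1, previous=()):
    """Return the final {token: probability} distribution (temperature > 0, top_k >= 1)."""
    logits = dict(logits)
    # Repeat penalty (llama.cpp-style): make tokens that already appeared less
    # likely by dividing positive logits and multiplying negative ones.
    for tok in set(previous):
        if tok in logits:
            v = logits[tok]
            logits[tok] = v / repeat_penalty if v > 0 else v * repeat_penalty
    # Top K: keep only the K highest-scoring tokens.
    top = sorted(logits, key=logits.get, reverse=True)[:top_k]
    ranked = sorted(softmax({t: logits[t] for t in top}).items(),
                    key=lambda kv: kv[1], reverse=True)
    # Top P: keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for tok, p in ranked:
        kept.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break
    # Min P: drop tokens below min_p times the most likely token's probability.
    threshold = min_p * kept[0][1]
    kept = [(tok, p) for tok, p in kept if p >= threshold]
    # Temperature: rescale the surviving logits (low = sharper, high = flatter).
    return softmax({tok: logits[tok] / temperature for tok, _ in kept})


def sample_next_token(logits, rng=random, **settings):
    """Draw one token from the filtered distribution."""
    probs = filter_candidates(logits, **settings)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]
```

For example, `sample_next_token({"the": 3.0, "a": 2.0, "cat": 1.0, "zebra": -2.0}, rng=random.Random(0))` always returns one of the first three tokens: with the default Top P of 0.95, those three already cover more than 95% of the probability, so "zebra" is cut off before sampling.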
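When structured output is used through LM Studio's local server rather than the Chat tab, the schema travels with the request. The sketch below assumes the OpenAI-style `response_format` field with `"type": "json_schema"`, which LM Studio's server documentation describes for structured output; check the documentation for your version. The schema, the model identifier and the helper names are hypothetical examples, and validating the reply before using it remains good practice.

```python
import json

# Hypothetical example schema: a sentiment label plus a short justification.
SENTIMENT_SCHEMA = {
    "type": "object",
    "properties": {
        "sentiment": {"type": "string", "enum": ["positive", "neutral", "negative"]},
        "reason": {"type": "string"},
    },
    "required": ["sentiment", "reason"],
}


def build_structured_request(text: str, model: str = "deepseek-r1-distill-qwen-7b") -> dict:
    """OpenAI-style payload asking the server to constrain the reply to the schema."""
    return {
        "model": model,  # hypothetical placeholder identifier
        "messages": [
            {"role": "system", "content": "Classify the sentiment of the user's text."},
            {"role": "user", "content": text},
        ],
        "response_format": {
            "type": "json_schema",
            "json_schema": {"name": "sentiment", "schema": SENTIMENT_SCHEMA},
        },
    }


def parse_reply(content: str) -> dict:
    """Parse the JSON reply and check the fields the schema requires."""
    data = json.loads(content)
    missing = [k for k in SENTIMENT_SCHEMA["required"] if k not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    allowed = SENTIMENT_SCHEMA["properties"]["sentiment"]["enum"]
    if data["sentiment"] not in allowed:
        raise ValueError(f"unexpected sentiment: {data['sentiment']}")
    return data
```

To use it, POST the payload as JSON to `http://localhost:1234/v1/chat/completions` (the default address) and pass `choices[0].message.content` from the response to `parse_reply`.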

How to choose the right model for your equipment

To optimize the use of DeepSeek-R1 according to your hardware capabilities, here’s a detailed table with recommendations for Windows and macOS configurations.

| Model | Parameters | RAM | Recommended GPU/VRAM (Windows) | Recommended Mac | Notes |
|---|---|---|---|---|---|
| DeepSeek-R1 1.5B | 1.5 billion | 8 GB | NVIDIA GTX 1660 (6 GB VRAM) | MacBook Air M2/M3 with 8 GB RAM | Ideal for light tasks and initial testing. |
| DeepSeek-R1 7B | 7 billion | 16 GB | NVIDIA RTX 3060 (12 GB VRAM) | MacBook Pro M2/M3 with 16 GB RAM | Good balance between performance and resources for moderate uses. |
| DeepSeek-R1 8B | 8 billion | 16 GB | NVIDIA RTX 3070 (8 GB VRAM) | MacBook Pro M2/M3 with 16 GB RAM | Slightly more powerful than the 7B model, suitable for more complex tasks. |
| DeepSeek-R1 14B | 14 billion | 32 GB | NVIDIA RTX 3080 (10 GB VRAM) | MacBook Pro M2/M3 Pro with 32 GB RAM | Suitable for applications requiring high precision and extended contexts. |
| DeepSeek-R1 32B | 32 billion | 64 GB | NVIDIA RTX 4090 (24 GB VRAM) | Mac Studio M2 Max/Ultra | For intensive use and massive data processing needs. |
| DeepSeek-R1 70B | 70 billion | 128 GB | NVIDIA A100 (40/80 GB VRAM) | Mac Studio M2 Ultra | Reserved for high-end configurations for maximum performance and advanced analysis. |

Additional notes

- Quantization of models: Using quantized versions of models (e.g. 4-bit) can reduce memory and VRAM requirements, allowing larger models to run on less powerful hardware configurations.

- Performance on Windows: Windows users should ensure that their GPU is compatible and has the necessary VRAM for the chosen model. Recent NVIDIA graphics cards, such as the RTX 30 and 40 series, are generally recommended for optimum performance.

- Mac configurations: Macs equipped with Apple Silicon chips (M1, M2, M3, M4) with the specified amount of RAM can run the corresponding models. Larger models require more robust configurations, such as the Mac Studio with M2 Ultra chip.

- Use Metal acceleration: For macOS users equipped with Apple Silicon chips, enabling Metal acceleration in LM Studio can improve performance by making the most of your Mac’s hardware capabilities.
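The memory figures in the table and the note on quantized models can be sanity-checked with a rule of thumb: the weights alone need roughly (number of parameters × bits per weight ÷ 8) bytes. The sketch below applies that rule; the function names are hypothetical, the flat 20% margin for the context (KV) cache and runtime is an assumption, and real quantized files are usually a little larger than their nominal bit width suggests, so treat the results as rough estimates only.

```python
# Rule-of-thumb memory estimate for running a model locally.
# Assumptions: 1 GB = 1e9 bytes, plus a flat 20% margin for context cache and runtime.


def estimate_weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GB."""
    # (billions of parameters) x (bits per weight) / (8 bits per byte) = GB
    return params_billion * bits_per_weight / 8


def fits_in_memory(params_billion: float, bits_per_weight: float,
                   memory_gb: float, overhead: float = 1.2) -> bool:
    """Rough check: do the weights plus the assumed margin fit in memory_gb?"""
    return estimate_weights_gb(params_billion, bits_per_weight) * overhead <= memory_gb


if __name__ == "__main__":
    # Compare full 16-bit weights with 4-bit quantized weights for each size.
    for size in (1.5, 7, 8, 14, 32, 70):
        fp16 = estimate_weights_gb(size, 16)
        q4 = estimate_weights_gb(size, 4)
        print(f"{size:>4}B  16-bit: {fp16:5.1f} GB  4-bit: {q4:5.1f} GB")
```

Under these assumptions, `fits_in_memory(32, 4, 24)` is `True` (about 16 GB of 4-bit weights plus margin on a 24 GB RTX 4090), while `fits_in_memory(70, 4, 40)` is `False`, which suggests the 80 GB variant of the A100 is the safer choice for the 70B model at 4-bit.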

Useful references

- LM Studio: LM Studio official website to download the application and access installation guides.

- DeepSeek-R1 documentation: Consult DeepSeek's official resources for detailed technical information on the models and their configurations.

- Forums and communities: Join forums like Reddit r/MachineLearning for advice and feedback from other users.

Following these recommendations will help you choose the DeepSeek-R1 model best suited to your hardware and get the best possible performance for your specific artificial intelligence needs.

FAQ: Install DeepSeek-R1 locally with LM Studio

If you’re on the fence about installing DeepSeek-R1 locally with LM Studio, here’s a FAQ that answers common questions and helps you make an informed decision.

Why should I install DeepSeek-R1 locally rather than using a cloud service?

Installing DeepSeek-R1 locally gives you total control over your data, guaranteeing confidentiality and security. You avoid the recurring costs associated with cloud APIs and eliminate network latency, although actual response speed depends on your hardware.

What are the advantages of DeepSeek-R1 over other language models?

DeepSeek-R1 is an open-source model optimized for advanced performance in natural language processing. It is available in different sizes, allowing it to be adapted to the available hardware resources. What’s more, it’s customizable and can be adjusted for specific needs.

Is my hardware sufficient to run DeepSeek-R1?

Hardware requirements vary depending on the size of the model you wish to use. For smaller models (like 7B), a machine with 16 GB of RAM and a graphics card like the NVIDIA RTX 3060 is sufficient. Larger models require more robust configurations; see the hardware table above for details.

Is it difficult to install and configure LM Studio?

No, installing LM Studio is simple. Just download the application from the official website and follow the instructions specific to your operating system. Configuration is intuitive, with preset options for different use cases.

Can I customize the model's responses?

Yes, LM Studio lets you customize model responses by adjusting parameters such as temperature and top-k sampling, and by using system prompts. You can save these configurations as presets for future use.

What kinds of tasks can DeepSeek-R1 perform?

DeepSeek-R1 excels at a variety of tasks such as writing, programming, text analysis, and conversational assistance. It can also be used for technical analysis and creative writing.

How can I ensure that my data remains private?

By running DeepSeek-R1 locally, your data never leaves your machine. This eliminates the risk of data leakage associated with cloud services and guarantees total confidentiality.

Is LM Studio compatible with my operating system?

LM Studio is compatible with Windows, macOS and Linux. You can download the appropriate version for your operating system from the official website.

Can I use DeepSeek-R1 without in-depth technical knowledge?

Yes, LM Studio is designed to be user-friendly, even for non-technical users. The intuitive interface and preset options make it easy to configure and use the model without requiring advanced skills.

Is there a community or support for LM Studio and DeepSeek-R1?

Yes, you can find support and advice by participating in forums like Reddit r/MachineLearning or by consulting the official documentation for LM Studio and DeepSeek-R1. The community is active and can provide valuable feedback.
