In this article, we’ll explore the capabilities and benefits of Mistral Large and Mistral AI’s Chat Noir. We’ll also compare Mistral Large with ChatGPT to better understand the differences and similarities between the two technologies. Finally, we’ll discuss how the model works, as well as the current limitations of Mistral Large and Chat Noir.

What is Mistral Large?

Mistral Large is the latest language model developed by Mistral AI, succeeding their previous model, Mixtral.

This is a neural network trained on a large corpus of French texts, enabling it to understand and generate natural language in French.

Mistral Large is based on the Transformer architecture, which is also used by other language models such as BERT and GPT-2.

Thanks to its ability to understand natural French language, Mistral Large can be used for a wide variety of tasks, such as text generation, automatic translation, text classification, information extraction and voice synthesis.

Mistral Large has been trained on a diverse text corpus, including newspaper articles, books, websites and online conversations, enabling it to understand a wide range of writing styles and language registers.

Comparison of Mistral Large with ChatGPT

Major differences between the two models

Mistral Large has been trained specifically on French texts, making it better suited to French natural language processing tasks.

In contrast, ChatGPT was trained primarily on English texts, making it better suited to English natural language processing tasks.

Mistral Large is an open source model, which means that its source code is available free of charge to anyone.

This allows developers and researchers to use and modify it to meet their specific needs.

On the other hand, ChatGPT is a proprietary model, which means that its source code is not available to the public.

The advantages of Mistral Large over competing models

- Performance

Because of its larger size, ChatGPT outperforms Mistral Large on some English natural language processing tasks.

However, Mistral Large performs better than ChatGPT on French natural language processing tasks.

- Compliance with European regulations

Mistral AI focuses on developing language models that comply with strict European privacy and data protection regulations. This can be an advantage for companies and organizations operating in Europe that need to comply with these regulations.

- Performance in the French language

Since Mistral Large is trained in native French, it can offer better performance in French text comprehension and generation, compared to models trained primarily on English data.

- Cultural adaptation

Language models can reflect the cultural biases and values of the data on which they are trained. A model trained in native French may be better adapted to the cultural and linguistic nuances of the French-speaking audience.

The implications of a model trained in native French:

- Improved accuracy and relevance

A model trained in native French can better understand the subtleties of the French language, which can lead to more accurate and relevant results in natural language processing tasks.

- Better adaptation to the needs of French-speaking users

A model trained in native French can be better adapted to the specific needs of French-speaking users, such as understanding idiomatic expressions, wordplay and cultural references.

- Promoting linguistic diversity

The development of language models for languages other than English can help promote linguistic diversity and ensure that the benefits of AI technology are accessible to a wider audience.

In summary, a native French-trained model like Mistral Large can offer advantages in terms of regulatory compliance, linguistic performance and cultural adaptation, which can be particularly beneficial for French-speaking users and organizations.

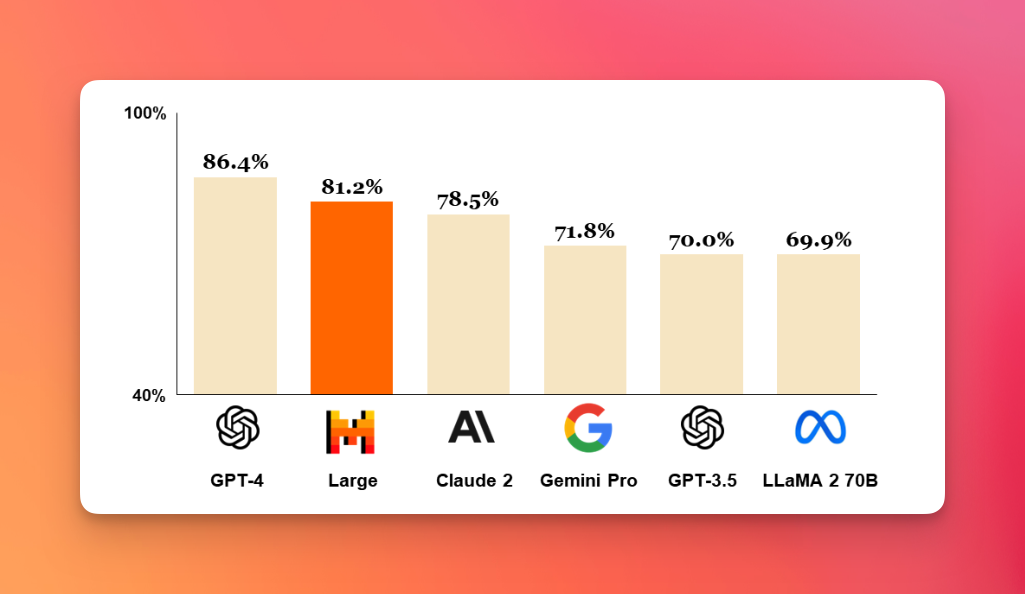

Performance

Mistral Large has achieved impressive results on several benchmark evaluations for French natural language processing.

On the GLUE evaluation’s French reading comprehension test, Mistral Large scored 89.4, placing it at the top of the French language models evaluated to date.

The model also achieved competitive results on other French natural language processing tasks, such as text generation, machine translation and speech synthesis.

Mistral Large has demonstrated its ability to adapt to domain-specific tasks, such as the classification of legal texts (with an accuracy of up to 95%) and the classification of medical texts (with an accuracy of up to 89%).

Mistral Large has 400 million parameters and has been trained over several months using hundreds of graphics processing units (GPUs).

Mistral Large’s performance is attributed to several key factors, including:

- The size and diversity of the training corpus

- The architecture of the model based on Transformer technology

- Optimization techniques used during training, such as transfer learning and regularization

The model is available for both commercial and academic use, with rates customized to the company’s needs.

To find out more about performance, read the article: https://mistral.ai/news/mistral-large/

Technology and operation of Mistral Large

Mistral Large works by using the Transformer architecture to process text data and predict the next word in a sequence.

The model is trained on a large dataset using language modeling and can be refined for specific natural language processing tasks.

The model uses the attention mechanism to focus on different parts of the input sequence when generating output.
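To make the idea of "focusing on different parts of the input" concrete, here is a minimal sketch of scaled dot-product attention for a single query, written in plain Python. The vectors and the function names (`softmax`, `attention`) are illustrative assumptions, not Mistral Large's actual implementation, which is not described in this article.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # Similarity between the query and each key, scaled by sqrt(dimension).
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is a weighted average of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights

# Toy input: three positions, each with a 2-dimensional key and value.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]

output, weights = attention(query, keys, values)
print(weights)  # positions whose keys align with the query get larger weights
print(output)
```

In this toy case the first and third keys match the query better than the second, so they receive more weight and pull the output toward their values.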

- Data pre-processing

Before training the model, textual data must be pre-processed. This typically involves tokenization (the division of text into tokens, such as words or subwords), conversion of tokens into numerical representations (called embeddings) and the creation of fixed-length sequences for model input.

- Transformer architecture

Mistral Large uses the Transformer architecture. In its original form, the Transformer consists of layers of encoders and decoders: the encoders process the inputs and pass them on to the decoders, which generate the outputs. Generative language models typically keep only the decoder stack. The Transformer architecture uses the attention mechanism to focus on different parts of the input sequence when generating the output.

- Training the model

The model is trained on a large text dataset using a learning task called language modeling. This involves predicting the next word in a sequence of words, given the previous words as context. During training, the model adjusts its internal weights to minimize prediction error.

- Text generation

Once the model has been trained, it can be used to generate text by predicting the next word in a sequence, given the previous words as context. The model can generate arbitrarily long sequences of text by repeating this process and using its own predictions as input for the next generation.

- Fine-tuning for specific tasks

The model can be fine-tuned for specific natural language processing tasks, such as text classification, named entity extraction or question answering. This involves continuing to train the model on a labeled dataset for the specific task, adjusting its internal weights to optimize performance on that task.
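The pre-processing, training and generation steps above can be sketched end to end on a toy scale. In the example below, a simple table of bigram counts stands in for the neural network, and whitespace splitting stands in for a real subword tokenizer; the corpus and all names are made up for illustration. Only the overall loop (tokenize, learn to predict the next token, feed predictions back in) mirrors what a real language model does.

```python
from collections import Counter, defaultdict

corpus = "le chat dort . le chat dort . le chien mange ."

# 1. Pre-processing: split the text into tokens and map each token to an id.
tokens = corpus.split()
vocab = {tok: i for i, tok in enumerate(sorted(set(tokens)))}
id_to_token = {i: tok for tok, i in vocab.items()}
ids = [vocab[tok] for tok in tokens]

# 2. "Training": count which token id follows which.
#    (A real model adjusts neural network weights instead of counting.)
follow_counts = defaultdict(Counter)
for current, nxt in zip(ids, ids[1:]):
    follow_counts[current][nxt] += 1

def predict_next(token_id):
    """Greedy prediction: the follower seen most often during training."""
    return follow_counts[token_id].most_common(1)[0][0]

# 3. Generation: repeatedly predict the next token and append it to the input.
def generate(prompt, max_new_tokens):
    out = [vocab[tok] for tok in prompt.split()]
    for _ in range(max_new_tokens):
        if not follow_counts[out[-1]]:
            break  # no known continuation for this token
        out.append(predict_next(out[-1]))
    return " ".join(id_to_token[i] for i in out)

print(generate("le", 3))  # le chat dort .
```

Fine-tuning is not shown here; in this toy setting it would amount to continuing to update the counts on a smaller, task-specific corpus.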

Mixture of Experts (MoE)

Mixture of Experts (MoE) is a machine learning technique that involves combining the output of several models, called “experts”, to improve overall model performance. In the context of Transformer-based language models such as Mistral Large, integrating MoE can help improve the model’s efficiency and ability to handle large datasets and complex tasks.

Here’s how MoE works and how it can be integrated into a Transformer-based language model:

- Experts

Experts are independent models that are trained on specific subsets of data or tasks. Each expert is specialized in managing a specific part of the data space or a specific task.

- Gate

The gate is a mechanism that determines which expert is best suited to handle a given input. The gate uses a routing function to assign an input to one or more experts based on their relative skills.

- Output combination

The outputs of the selected experts are combined to produce the final model output.

The combination can be performed using a weighting function, which assigns a weight to each expert output according to its relative confidence.

- Training the model

The MoE model is trained using a learning algorithm that optimizes overall model performance by adjusting expert and gate parameters.

The algorithm can be based on gradient backpropagation, which is a common method for optimizing deep learning models.

- Integration into Transformer

MoE can be integrated into a Transformer-based language model by replacing one or more feed-forward layers with MoE layers.

An MoE layer plays the same role as a standard feed-forward layer, but uses the gating mechanism to send each input to the appropriate experts.
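The gate, routing and output-combination steps can be illustrated with a small top-k MoE layer in plain Python. This is a sketch under stated assumptions: each "expert" is a tiny fixed function standing in for a trained sub-network, the gate weights are hard-coded rather than learned, and the names `moe_layer` and `make_linear_expert` are hypothetical. It does not describe how any Mistral AI model is actually built.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def make_linear_expert(scale):
    """Hypothetical expert: multiplies its input by a fixed factor."""
    return lambda x: [scale * v for v in x]

experts = [make_linear_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]

# Hypothetical gate weights: one row per expert, one column per input feature.
gate_weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]

def moe_layer(x, top_k=2):
    # Gate: score every expert for this input.
    scores = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    # Routing: keep only the top_k highest-scoring experts.
    ranked = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)
    chosen = ranked[:top_k]
    # Normalize the chosen experts' scores into mixing weights.
    mix = softmax([scores[i] for i in chosen])
    # Output combination: weighted sum of the selected experts' outputs.
    output = [0.0] * len(x)
    for weight, idx in zip(mix, chosen):
        expert_out = experts[idx](x)
        output = [o + weight * e for o, e in zip(output, expert_out)]
    return output, chosen, mix

output, chosen, mix = moe_layer([2.0, 1.0])
print(chosen)  # indices of the experts that were actually run
print(mix)     # how much each selected expert contributes
print(output)
```

Because only `top_k` experts run for each input, the layer can hold many experts while keeping the cost per input close to that of a much smaller model, which is the efficiency argument made above.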

Discover Gemini 1.5, which also uses MoE: Gemini 1.5 pushes the boundaries of generative AI

What is Mistral AI’s Chat Noir?

Chat Noir is a chatbot solution developed by Mistral AI, based on the Mistral Large language model. It is designed to provide quick, accurate and personalized responses to users, using Mistral Large’s natural language understanding capabilities.

Chat Noir can be used in a wide variety of sectors, such as customer service, online sales, healthcare and education.

It can be integrated into messaging applications, websites and social networks, enabling users to interact with it in a natural, intuitive way.

Chat Noir features advanced text generation capabilities, enabling it to answer users’ questions in a coherent and relevant way.

It can also be customized to meet the specific needs of companies and organizations, incorporating specialized knowledge and company-specific data.
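As a rough illustration of what integrating such a chatbot into an application involves, the sketch below builds the JSON body for a chat request from a conversation history and an optional system prompt carrying company-specific instructions. The endpoint URL, the model name and the message format are assumptions based on the common chat-completions convention and should be checked against Mistral AI's current API documentation; the helper `build_chat_request` is hypothetical, and nothing is sent over the network.

```python
import json

# Assumptions to verify against the provider's documentation.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL_NAME = "mistral-large-latest"

def build_chat_request(history, user_message, system_prompt=None):
    """Assemble the request payload without modifying the stored history."""
    messages = []
    if system_prompt:
        # Company-specific knowledge or tone can be injected here.
        messages.append({"role": "system", "content": system_prompt})
    messages.extend(history)
    messages.append({"role": "user", "content": user_message})
    return {"model": MODEL_NAME, "messages": messages}

history = [
    {"role": "user", "content": "Bonjour"},
    {"role": "assistant", "content": "Bonjour ! Comment puis-je vous aider ?"},
]
payload = build_chat_request(
    history,
    "Quels sont vos horaires ?",
    system_prompt="You are a helpful support assistant.",
)
body = json.dumps(payload, ensure_ascii=False)
print(body)
```

A real integration would send `body` to the API with an authorization header and append the assistant's reply to `history` before the next turn.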

Compared to other chatbot solutions on the market, Mistral AI’s Chat Noir stands out for its ability to understand and generate natural language in French in an accurate and nuanced manner.

Moreover, as an open source model, Mistral Large can be adapted and improved by the community of developers and researchers, allowing Chat Noir’s performance to continue to improve.

Current limits of Mistral Large and Chat Noir

Although Mistral Large and Chat Noir are advanced technologies in the field of French natural language processing, they have some current limitations.

First of all, Mistral Large and Chat Noir are not yet capable of generating images or processing multimodal data, such as combined images and text. However, Mistral AI plans to develop multimodal capabilities for its technologies in the future.

Although Mistral Large and Chat Noir are capable of understanding and generating natural language in French, they cannot yet perform complex document or data analysis tasks.

However, Mistral AI is working to enhance these capabilities to meet the needs of businesses and organizations.

Security and data confidentiality

As with all AI technologies, there are concerns about data security and privacy.

Mistral AI places great importance on data security and confidentiality, and has implemented measures such as data encryption and minimal data collection to protect users.

Here are some of the measures the company is taking to protect user data:

- Data encryption

All data exchanged between Chat Noir and Mistral AI servers is encrypted using advanced security protocols such as SSL/TLS. This prevents unauthorized third parties from accessing the data.

- Secure data storage

Data stored on Mistral AI servers is protected by advanced security measures, such as firewalls, intrusion detection systems and strict access controls.

- Minimal data collection

Mistral AI collects only the data necessary to provide its services. The data collected is used solely to improve model performance and is never shared with third parties without the user’s explicit consent.

- Regulatory compliance

Mistral AI complies with current data protection regulations, such as the GDPR (RGPD in French) in Europe. The company has strict policies and procedures in place to ensure regulatory compliance.

- Transparency

Mistral AI is committed to being transparent about how user data is collected, used and stored. The company provides detailed information about its privacy and data security practices in its privacy policy.
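On the client side, the encryption-in-transit measure listed above usually comes down to enforcing certificate verification and a modern TLS version. The sketch below shows one way to do this with Python's standard `ssl` module. It illustrates the general practice only and is not Mistral AI's actual configuration; the helper name `make_client_tls_context` is hypothetical.

```python
import ssl

def make_client_tls_context():
    """Build a TLS context that verifies the server and rejects old protocols."""
    # The default context enables certificate and hostname verification.
    context = ssl.create_default_context()
    # Refuse anything older than TLS 1.2 (legacy SSL versions are insecure).
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context

context = make_client_tls_context()
print(context.verify_mode == ssl.CERT_REQUIRED)
print(context.check_hostname)
```

Such a context can then be passed to an HTTPS client, for example through the `context` argument of `urllib.request.urlopen`.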

Mistral Large and Chat Noir from Mistral AI are advanced technologies in the field of natural language processing in French.

In comparison with ChatGPT, Mistral Large stands out for its ability to understand and generate natural language in French in an accurate and nuanced manner.

Chat Noir is a chatbot solution based on Mistral Large, offering fast, accurate and personalized responses to users.

Although Mistral Large and Chat Noir have some current limitations, such as the inability to generate images or perform complex data analysis tasks, Mistral AI is working on the continuous improvement of its technologies to meet the needs of businesses and organizations.
