OmniHuman, developed by researchers at ByteDance, is a revolutionary AI model that transforms a single image and a motion signal (audio or video) into realistic human videos.

This technological feat opens up new prospects for animation, entertainment and many other sectors.


How OmniHuman works: A multimodal approach

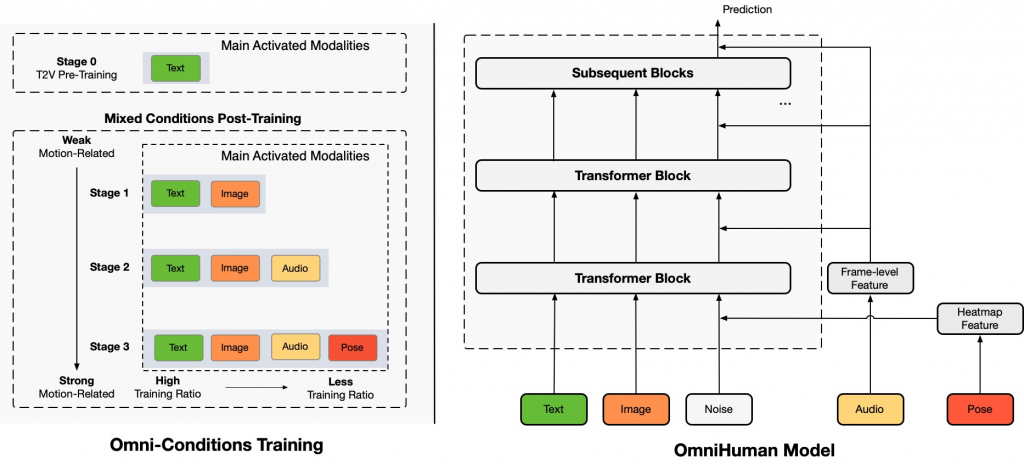

At the heart of OmniHuman lies a multimodal conditioning approach, meaning that the model can integrate different types of input to create a video.

Concretely, the process takes place in several stages:

- Input: The user starts with a single image of a person, which can be a photo, a drawing or even a cartoon character. This image can be a portrait, a bust shot or a full-length image, demonstrating OmniHuman’s great adaptability.

- Motion signal: Next, a motion signal is added. This can be an audio clip, such as a person talking or singing, or a video that provides information about the movements to be reproduced.

- Processing: OmniHuman uses a technique called “multimodal motion conditioning” to interpret motion signals and transpose them into believable human movements. It takes into account the rhythm, style and nuances of the signals to generate realistic gestures, facial expressions and body movements.

- Output: The end result is a high-quality video in which the person in the image appears to perform the actions or movements dictated by the motion signal. OmniHuman excels at handling weak signals, such as audio alone, which says little about how the body should move, and still produces impressive results.
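The four stages above boil down to a simple input contract: one reference image plus at least one motion signal. The short Python sketch below expresses that contract. OmniHuman's code has not been released, so the `AnimationRequest` class and the `motion_signals` function are hypothetical names chosen for illustration. They only validate the inputs and do not generate any video.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AnimationRequest:
    """Hypothetical container for one generation request (not OmniHuman's API)."""
    reference_image: str                 # photo, drawing or cartoon: portrait, bust or full body
    audio: Optional[str] = None          # e.g. a clip of someone talking or singing
    driving_video: Optional[str] = None  # a video whose movements should be reproduced


def motion_signals(request: AnimationRequest) -> List[str]:
    """Return the motion signals that will condition the output video."""
    signals = []
    if request.audio is not None:
        signals.append("audio")
    if request.driving_video is not None:
        signals.append("video")
    # In the workflow described above, an image alone is not enough:
    # some motion signal must drive the animation.
    if not signals:
        raise ValueError("at least one motion signal (audio or video) is required")
    return signals


if __name__ == "__main__":
    req = AnimationRequest("portrait.png", audio="song.wav")
    print(motion_signals(req))  # ['audio']
```

Passing both `audio` and `driving_video` corresponds to the combined audio-and-video mode, where each signal can guide different body parts.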

OmniHuman stands out for its ability to generate high-quality, ultra-realistic videos, even from weak input signals, thanks to a mixed training strategy that draws on a variety of training data.

This allows the model to overcome the limitations encountered by previous approaches.
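The sketch below illustrates one generic way to mix training conditions. For each training clip, every motion signal is kept with its own probability. As a result, the model sees the same reference image paired with audio alone, video alone, both, or neither. The probabilities in `KEEP_PROB`, the `Sample` class and the `mix_conditions` function are all hypothetical. This is an illustrative condition-dropout scheme, assumed here for explanation, and not ByteDance's published training recipe.

```python
import random
from dataclasses import dataclass
from typing import Optional

# Hypothetical keep-probabilities (not published values): the weaker signal
# (audio) is kept more often than the stronger one (driving video), so the
# model cannot simply learn to ignore audio whenever video is present.
KEEP_PROB = {"audio": 0.5, "video": 0.25}


@dataclass
class Sample:
    """One hypothetical training clip: a reference frame plus optional signals."""
    reference_image: str
    audio: Optional[str] = None
    video: Optional[str] = None


def mix_conditions(sample: Sample, rng: random.Random) -> dict:
    """Pick which conditions accompany the reference image for one training step."""
    # The reference image is always used: it defines who is being animated.
    conditions = {"reference_image": sample.reference_image}
    for name, prob in KEEP_PROB.items():
        value = getattr(sample, name)
        # A signal can only be kept if this clip actually has it.
        if value is not None and rng.random() < prob:
            conditions[name] = value
    return conditions


if __name__ == "__main__":
    rng = random.Random(0)
    clip = Sample("frame.png", audio="speech.wav", video="dance.mp4")
    for _ in range(3):
        print(sorted(mix_conditions(clip, rng)))
```

Clips that lack a signal (for example, video with no usable audio) can still be used for training, which is one way a mixed strategy lets a model learn from more varied data.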

The model can generate videos with natural movements, precise gestures and meticulous attention to detail.

Furthermore, it supports different visual and audio styles, making it highly versatile for various types of content.

OmniHuman’s never-before-seen capabilities: Much more than just animation

OmniHuman doesn’t just generate basic movements.

Its capabilities include:

Handling of various input types

It supports portraits, head-and-shoulders shots and full-length images, with the ability to animate cartoon characters, animals and even man-made objects.

The AI adapts movements according to each subject’s own style.

Vocal and gestural animation

OmniHuman excels at lip-syncing and gesture handling, creating strikingly realistic talking avatars. It can generate facial expressions and body movements that match the rhythm and style of a song.

Compatibility with various motion signals

In addition to audio, OmniHuman can be guided by video signals, enabling specific actions to be reproduced. It is also possible to combine audio and video for more precise control of animated body parts.

For example, a video of someone dancing can be used to make the person in the reference image dance.

Diversity of use scenarios

OmniHuman supports a variety of musical styles, body poses and singing styles, including high-pitched songs.

It can also handle multiple languages. In addition, the AI can animate details such as jewelry or accessories so that they follow the person's movements.

Image quality

The quality of the videos generated depends largely on the quality of the reference image. The results produced by OmniHuman are impressively realistic with very high visual consistency, including tooth movement, breathing, facial expressions and gestures.

Potential applications for OmniHuman: A transformative impact

The implications of OmniHuman are vast, extending far beyond entertainment. Here are just a few examples of potential applications:

- Entertainment: Creation of personalized avatars for video games and films, production of animated content, and development of hyper-realistic virtual characters. AI can be used to create animated films with AI-generated actors.

- Education: Creation of teacher avatars for e-learning, animation of historical characters for immersive courses, and development of captivating educational content.

- Communication: Improving online communication with expressive avatars, creating avatars for virtual meetings and developing personalized communication content.

- Health: Creation of therapeutic animations for patients, development of communication tools for people with expression difficulties, and help with training healthcare staff.

- Commerce: Development of personalized shopping experiences, creation of avatars for customer service, and production of innovative advertising.

OmniHuman represents a significant breakthrough in human video generation with AI, offering unprecedented realism and flexibility.

It’s capable of handling complex poses and varied scenarios, such as a person holding a glass.

OmniHuman versus the competition: One step ahead

Although other AI models exist for animating faces or bodies, OmniHuman stands out for its realism and versatility.

Compared with models such as Microsoft’s VASA-1 or EchoMimicV2, OmniHuman achieves better results, particularly in terms of animation quality.

According to the researchers' performance tests, OmniHuman outperforms its competitors for both portrait and full-body animation.

OmniHuman has already been the subject of a technical publication detailing its architecture and training method. The project page is https://omnihuman-lab.github.io/.

The ethical challenges and responsibility of ByteDance

With such power, ethical concerns emerge.

The ease with which OmniHuman can create realistic videos raises questions about deepfakes, misinformation and identity theft.

The creators of OmniHuman are aware of these challenges and are committed to an ethical approach to AI.

The images and audio clips used in the demonstrations are publicly sourced or model-generated for research purposes. The team is open to concerns and ready to remove any content that raises an ethical issue.

The tool must be used responsibly and with an awareness of the ethical implications of creating realistic human animations.

The Future of OmniHuman: Accessibility and Perspectives

OmniHuman is currently in the research phase and not yet available to the public.

However, the demos shared on the project's GitHub page give a glimpse of its potential.

It’s possible that the code will be released soon, paving the way for further exploration and experimentation. Although it’s unclear when it will be available, the researchers have shared a technical paper explaining how the model was trained.

OmniHuman and video AI: A Revolution in Progress

OmniHuman marks a turning point in video creation with AI.

Its ability to generate realistic human videos from a single image and a motion signal is impressive and promising.

Whether for entertainment, education, communication, or other sectors, OmniHuman is more than just a tool: it’s a glimpse into the future of human animation.

FAQ

- What is OmniHuman and how does it work? OmniHuman is an AI model that generates realistic videos from an image and a motion signal (audio or video). It uses multimodal conditioning to transform these inputs into realistic animations.

- What types of input can OmniHuman process? OmniHuman can process a single image (portrait, bust shot, full-length image, drawing or cartoon character), as well as an audio or video motion signal.

- Is OmniHuman limited to human animation? No, OmniHuman can also animate cartoon characters, animals and even artificial objects, adapting movements to the characteristics of each subject.

- How does OmniHuman handle gestures and lip-synchronization? OmniHuman excels at lip-synchronization and gesture management. It can create facial expressions and body movements that match the rhythm and style of the motion signal.

- What are the main differences between OmniHuman and other animation tools? OmniHuman stands out for its realism, its versatility, its ability to handle weak signals such as audio alone, and its better performance than other tools such as VASA-1 or EchoMimicV2.

- What are the potential applications for OmniHuman? The applications are vast: entertainment (games, films), education (teacher avatars, immersive courses), communication (virtual meetings), healthcare (therapeutic animations), and commerce (personalized shopping experiences).

- Is OmniHuman available to the public? No, OmniHuman is currently in the research phase and not yet available to the public. It is possible that its code will be published soon.

- What are the ethical concerns associated with OmniHuman? The ability to create realistic videos raises questions about deepfakes, misinformation and identity theft. Developers are committed to an ethical approach to AI.

- How do the developers handle copyright and privacy issues? The images and audio clips used in the demonstrations are publicly sourced or model-generated, and are used for research purposes only. The team is ready to remove any content that raises ethical concerns.

- How can I stay informed about OmniHuman developments? It’s recommended to follow the developers’ GitHub page, where they share updates and research progress.
